"Alexa, start skill development"

Currently I hear and read everywhere that "voice interaction is the future". I'm not totally convinced so far, but I'm pretty sure that there is a market for apps made for voice interaction.

In this post I want to write about the challenges I faced while developing my first app for voice interaction.

If you are located in Germany feel free to test my skill "BilloBenzin" for free. Feedback is very welcome.

To get it click the following link:

> BilloBenzin @ Amazon.de <

I was curious how hard it is to develop my own skill (a skill is a voice application in the Amazon universe). After attending a full-day workshop with @muttonia I had a very good overview of what needs to be done to develop and publish a skill.

Developing skills is especially attractive for the German market, because there are not that many skills in the store so far (I think @muttonia mentioned roughly 2,000, compared to over 1 million in the US).

I started to collect some ideas for my first skill and decided to go with the use case of cheap petrol. The goal was a skill that tells you the cheapest petrol station in your area.

To keep the scope small for my first development I only wanted to target areas within Germany (the skill is only released in the German skill store). Furthermore I wanted to limit the interaction to a useful minimum: after the user gets the answer, the skill stops (no real conversations, which would be a tricky topic at the moment anyway).

Below I will go through the things I learned and the challenges I had to face.

Concept behind Alexa on Echo, Echo Dot,...

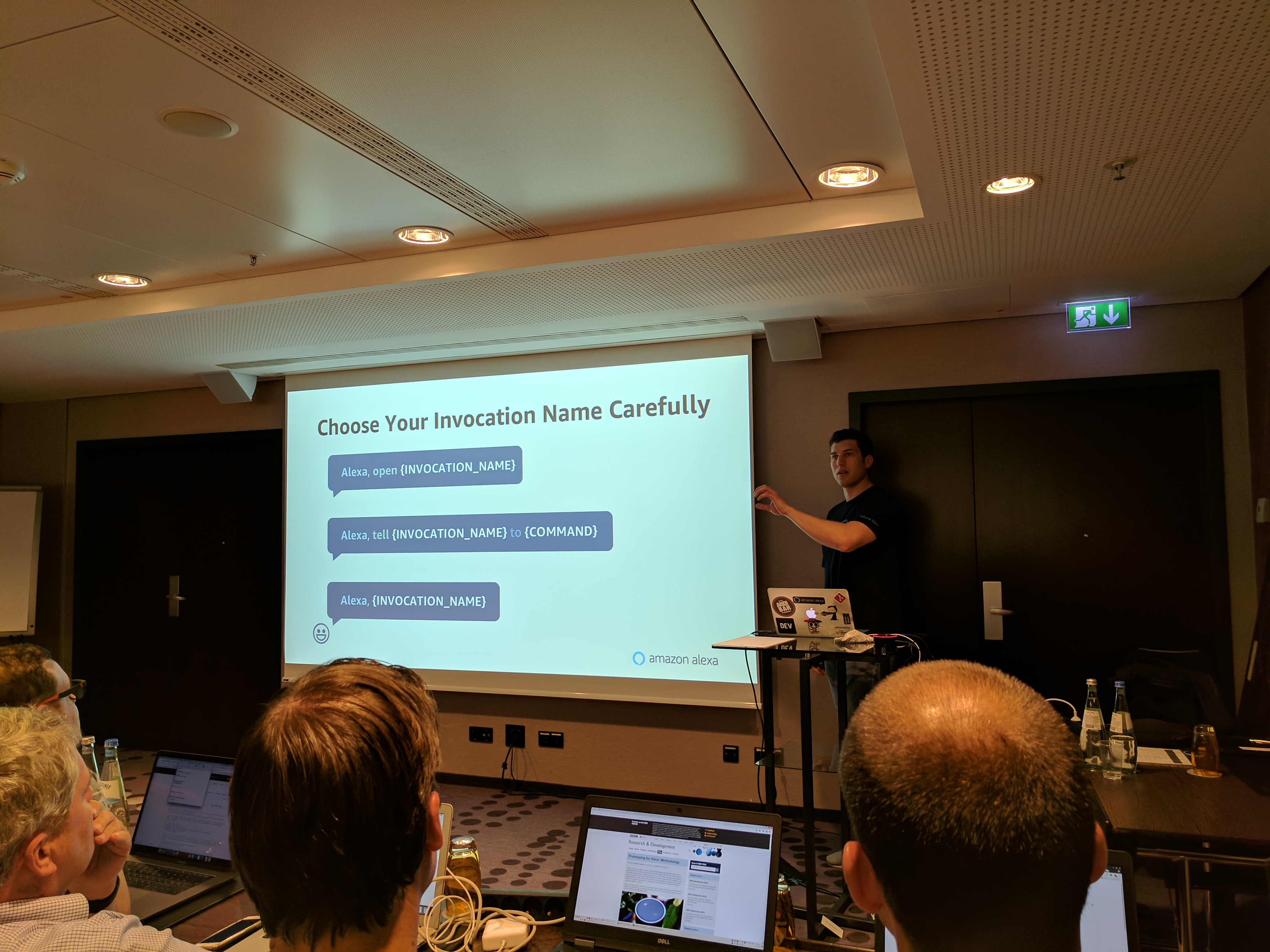

An Alexa-enabled device (I think that's what Amazon calls it) like the Echo or Echo Dot just acts as a bridge to cloud functions. It permanently listens for a keyword like "Echo", "Alexa" or "Computer", followed by a command, the skill's invocation name and optionally a phrase containing some variable inputs (there is also an "inversion" of the sentence structure that works, see the invocation picture below).

Everything after the keyword "Alexa" is basically the intent, which is transformed via speech-to-text and sent as JSON to the cloud. There it is processed by an AWS Lambda function (or an alternative cloud function). The result is returned as JSON to the Alexa-enabled device, where a text-to-speech transformation is applied. The result is vocalized to the user.
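
To make this round trip more concrete, here is a minimal sketch of a cloud function that turns a request JSON into a response JSON without any SDK. The intent and slot names are the ones from the intent-scheme further down; the helper names `buildResponse` and `handleRequest` and the reply texts are made up for illustration and are not the actual code of my skill.

```javascript
// Wrap plain text in the JSON envelope that Alexa reads out to the user.
function buildResponse(text) {
  return {
    version: '1.0',
    response: {
      outputSpeech: { type: 'PlainText', text: text },
      shouldEndSession: true // one answer, then the skill stops
    }
  };
}

// Turn an incoming request document into a response document.
function handleRequest(event) {
  const request = event.request;
  if (request.type === 'IntentRequest' &&
      request.intent.name === 'GetCheapestPetrolForCityWithPetrolTypeIntent') {
    const slots = request.intent.slots || {};
    const city = slots.city && slots.city.value;
    const petrolType = slots.petrolType && slots.petrolType.value;
    // Placeholder answer; a real skill would query a price backend here.
    return buildResponse('Searching the cheapest ' + petrolType + ' in ' + city + '.');
  }
  return buildResponse('Sorry, I did not understand that.');
}

// In a Lambda function this could be wired up roughly like this:
// exports.handler = (event, context, callback) => callback(null, handleRequest(event));
```

The point of the sketch is only that the cloud function never sees audio: it receives the recognised intent and slot values as JSON and answers with text.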

Invocation-name and interaction model

To get the expected results you really need to care about the invocation-name, the syntax of your invocation sentences, the parameters (Intent-Slot-Types) and the error-handling.

invocation-name

The invocation-name is very tricky, especially in German, if you want to come up with a nice, syntactically meaningful phrase. You can only define one invocation-name (it does not need to be the same as your skill name), so be careful here.

During my development / review-process I had to change the invocation-name twice, for the following reasons:

- My first invocation-name was "benzinpreis" (means petrol price) and I wanted to use it in combination with the command "erfrage" (means ask). That would have given me some nice, natural-sounding German phrases like "Alexa, erfrage Benzinpreis" or "Alexa, erfrage Benzinpreis für Diesel in Frankfurt", which would be pretty neat because the phrases are short and contain all the necessary inputs. This invocation-name was rejected in my first certification by Amazon because "erfrage" is not an allowed/official command for Alexa, even though it is perfectly recognized all the time, most likely because it sounds similar to "frage", which is officially supported.

- My second invocation-name was "billobenz" (just a catchy name). This one I had to change because Alexa seems to have some problems with the German language. In most cases "billobenz" was understood by Alexa as "below bands", and then it could not find a skill matching "below bands".

- I ended up with "billobenzin" (catchy name, means something like "cheapypetrol"). This one is perfectly recognized, but it makes meaningful invocation-phrases longer, e.g. "Alexa, frage BilloBenzin nach dem günstigsten Diesel Preis in Frankfurt".

intent-scheme

The intent-scheme is a JSON-File that defines the intents you (can) handle in your cloud-function and what parameters (so called Slots) they take as an input.

Below you can see a definition of 2 intents - one custom and the standard HelpIntent. As you can see the custom intent defines 2 input-slots.

In the intent-scheme you can define more intents than you currently resolve / handle in your cloud-function. This allows you to adjust the cloud-function while your skill is live, without a new review process. I would not recommend doing this: if you do not predefine sample utterances for your unimplemented intents, they will most likely not be recognized, and if you do add sample utterances for them, you will get problems as soon as a user starts such an intent, because you do not handle it.

If you really want to go that way, I highly recommend at least providing a stub implementation in your cloud-function that tells the user that this intent will be implemented later and routes her to the help-intent. But: this is not a good UX!

    {
      "intents": [
        {
          "slots": [
            {
              "name": "city",
              "type": "AMAZON.DE_CITY"
            },
            {
              "name": "petrolType",
              "type": "PETROL_TYPE"
            }
          ],
          "intent": "GetCheapestPetrolForCityWithPetrolTypeIntent"
        },
        {
          "intent": "AMAZON.HelpIntent"
        }
      ]
    }
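
The stub idea mentioned above could look roughly like the following sketch. It is plain JavaScript without the SDK; `GetOpeningHoursIntent` is a hypothetical intent that is declared but not implemented yet, and the texts and the `dispatch` helper are assumptions for illustration only.

```javascript
const HELP_TEXT = 'You can ask me for the cheapest petrol in a city.';

// One handler per intent declared in the intent-scheme.
const handlers = {
  'GetCheapestPetrolForCityWithPetrolTypeIntent': function (slots) {
    return 'Looking up prices ...'; // real lookup omitted in this sketch
  },
  'AMAZON.HelpIntent': function () {
    return HELP_TEXT;
  },
  // Stub: declared in the schema, but not implemented yet (hypothetical intent).
  'GetOpeningHoursIntent': function () {
    return 'This feature is not available yet. ' + HELP_TEXT;
  }
};

// Pick the handler for an intent; unknown intents end up in the help text.
function dispatch(intentName, slots) {
  const known = Object.prototype.hasOwnProperty.call(handlers, intentName);
  const handler = known ? handlers[intentName] : handlers['AMAZON.HelpIntent'];
  return handler(slots || {});
}
```

This way every intent in the schema produces some answer, even if the answer is only "not yet".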

slot-types

Slot-Types define the input-"words" that should be recognised for an intent. You have to give your input a type / a list of values that Alexa knows / recognises. In the US you can also use the Literal type, which accepts any input, with the consequence that you need to provide a lot more sample utterances to make recognition close to deterministic. For German skills the Literal type is not supported.

In the JSON snippet above you see the two slots of my custom intent. petrolType has a custom slot-type. city uses the predefined type "AMAZON.DE_CITY", which I extended. It basically contains a list of about 5,000 German city names.

Finding the right type for city was a challenge. At first I wanted to use something like a Literal for free input, because the backend that resolves the area also supports streets, zip codes etc., and I wanted to handle any address input at once. I had to change my way of thinking here and be more explicit, so I decided on the predefined type "AMAZON.DE_CITY". Unfortunately I found out that not all cities I tested are covered. E.g. "Nossen" (the town where I lived the first years of my life) is not recognised, so I had to extend the type. There remains the uncertainty that I did not add a city a customer will ask for. That is a drawback of this type.

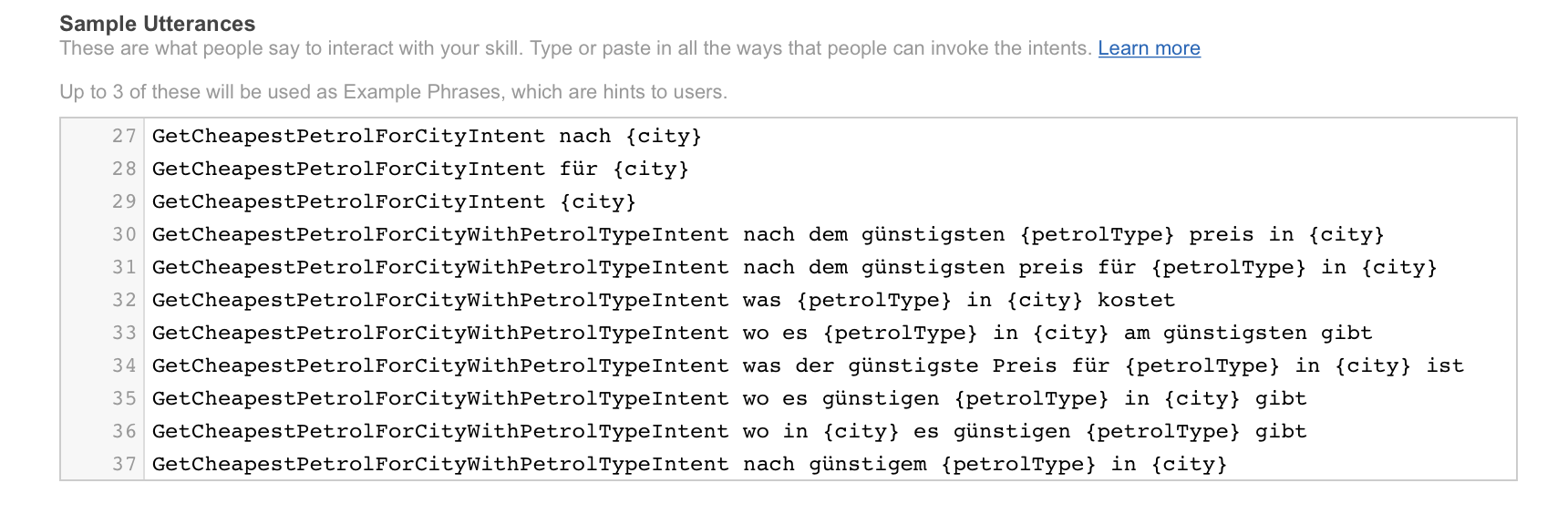

syntax

You should support Amazon's machine-learning algorithms with a lot of sample utterances that show how to invoke your skill. There you also define which element of the phrase is a parameter / slot you want to handle in your cloud-function (see examples of my skill in picture below).

In the Alexa workshop it was recommended to use at least 40 samples. The more you provide, the better the user's intention is recognised.
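
Writing 40 or more samples by hand gets tedious, so one option is to generate them from phrase fragments. The following is only a sketch of that idea: the German fragments are illustrative and not the utterances of my skill, and `buildUtterances` is a made-up helper. Each generated line starts with the intent name, followed by the phrase with the slots in curly braces.

```javascript
const INTENT = 'GetCheapestPetrolForCityWithPetrolTypeIntent';

// Illustrative fragments; every combination becomes one sample utterance.
const openers = ['nach dem günstigsten', 'nach dem billigsten'];
const middles = ['{petrolType} Preis', '{petrolType}'];
const places = ['in {city}', 'für {city}'];

// Build the cartesian product of all fragment lists.
function buildUtterances(intent, parts) {
  let phrases = [''];
  parts.forEach(function (options) {
    const next = [];
    phrases.forEach(function (prefix) {
      options.forEach(function (option) {
        next.push((prefix + ' ' + option).trim());
      });
    });
    phrases = next;
  });
  return phrases.map(function (phrase) {
    return intent + ' ' + phrase;
  });
}

const utterances = buildUtterances(INTENT, [openers, middles, places]);
console.log(utterances.join('\n'));
```

Generated phrases should still be read through once, because not every combination is natural German.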

AWS

I knew the concepts of serverless computing and Lambda functions in the cloud, but I had never had the chance to work with them before.

Amazon offers developers everything you can think of as a cloud-based service that you pay for only on a usage / time / request basis. For Alexa skills the integration is very easy because Amazon already gives you Alexa presets for a quick start. It is highly recommended to use AWS.

My skill uses the following AWS components: a Lambda function based on the alexa-skill-kit-sdk-factskill template, written in Node.js / JavaScript, a DynamoDB table, and S3 for the skill graphics. Using all these components from Amazon makes it easy and fast to develop your skill. But it comes with the risk of a vendor lock-in.

I would highly recommend using a Lambda function based on the alexa-skill-kit-sdk-factskill template (for the start) because it already includes the alexa-sdk, so you can directly start developing your intent-handlers.

It is also quite handy to use DynamoDB for persisting the state of your skill. In my skill, for example, I store the petrol type and the city the user asked for. In future requests for the cheapest price she can take a shortcut: she just asks Alexa to open the skill, and it responds with the latest price for the remembered city and petrol type.
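
The remembering logic can be sketched independently of the database. In the following example a `Map` stands in for the DynamoDB table, so it runs without AWS; `resolveQuery` and the user id are assumptions for illustration, not the code of my skill.

```javascript
// Stand-in for the DynamoDB table: user id -> last query.
const store = new Map();

// Use the slots of the current request, fall back to the remembered values.
function resolveQuery(userId, slots) {
  const remembered = store.get(userId) || {};
  const query = {
    city: slots.city || remembered.city,
    petrolType: slots.petrolType || remembered.petrolType
  };
  if (!query.city || !query.petrolType) {
    return null; // nothing remembered yet: the skill has to ask the user
  }
  store.set(userId, query); // remember for the next request
  return query;
}

// First request with full input, second request just opens the skill.
resolveQuery('user-1', { city: 'Frankfurt', petrolType: 'Diesel' });
console.log(resolveQuery('user-1', {}));
```

With a real table only the two `store` calls would change; the fallback logic stays the same.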

Your AWS-Lambda function has a unique identifier that can be directly connected to your skill in the Amazon Developer console (Alexa) (see example in picture below).

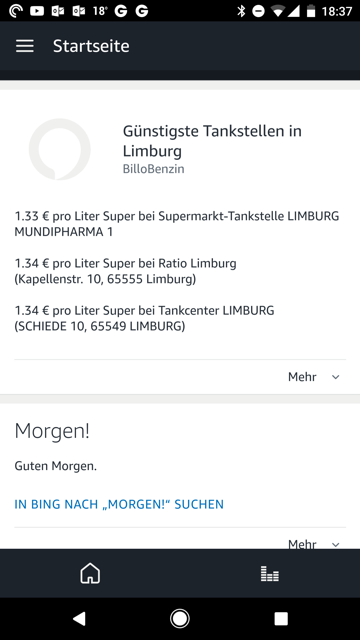

Provide Cards in your skill

Amazon offers the functionality to put detailed / additional information on so-called cards that appear in the Alexa app (and maybe on the Echo Show?). In my skill Alexa just responds with the one and only cheapest petrol station in speech, but I show the top 3 with additional information in a card (see screenshot below).
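
In the response JSON a card is just one more field next to the speech output. The following sketch builds such a response with a simple text card; the station data, the texts and the helper name `buildResponseWithCard` are invented for illustration, and the function assumes that at least one station is passed in.

```javascript
// Speech names only the cheapest station, the card lists the top 3.
function buildResponseWithCard(stations, petrolType, city) {
  const top3 = stations
    .slice() // do not modify the caller's array
    .sort(function (a, b) { return a.price - b.price; })
    .slice(0, 3);
  const cheapest = top3[0]; // assumes a non-empty station list
  const lines = top3.map(function (station, index) {
    return (index + 1) + '. ' + station.name + ': ' + station.price.toFixed(3) + ' EUR';
  });
  return {
    version: '1.0',
    response: {
      outputSpeech: {
        type: 'PlainText',
        text: 'The cheapest ' + petrolType + ' in ' + city + ' is at ' + cheapest.name + '.'
      },
      card: {
        type: 'Simple',
        title: petrolType + ' in ' + city,
        content: lines.join('\n')
      },
      shouldEndSession: true
    }
  };
}
```

Keeping the spoken answer short and moving the details to the card fits the idea of limiting the voice interaction to a minimum.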

Unit-Testing a skill

Unfortunately I haven't tried it so far. But it is definitely on my list, because the bigger your skill gets, the trickier it gets to test it manually.

As a start I recommend reading the following blog-post: Unit-Testing an Amazon Alexa Skill.

Certification / review process

My skill failed certification twice. But most of the review comments really helped me to improve my skill and make it more robust.

I recommend reading through the Submission Checklist because it is pretty helpful.

Skill propagation

That's something I still have to figure out. How do you attract potential customers to your skill? Currently they can only search for specific keywords in the skill store and will find several skills fulfilling the same use case. I think you need a lot of unique users to get featured by Amazon. I hope to find this out during the lifetime of my skill. I will update this post accordingly.

Any tips in this direction are very welcome.

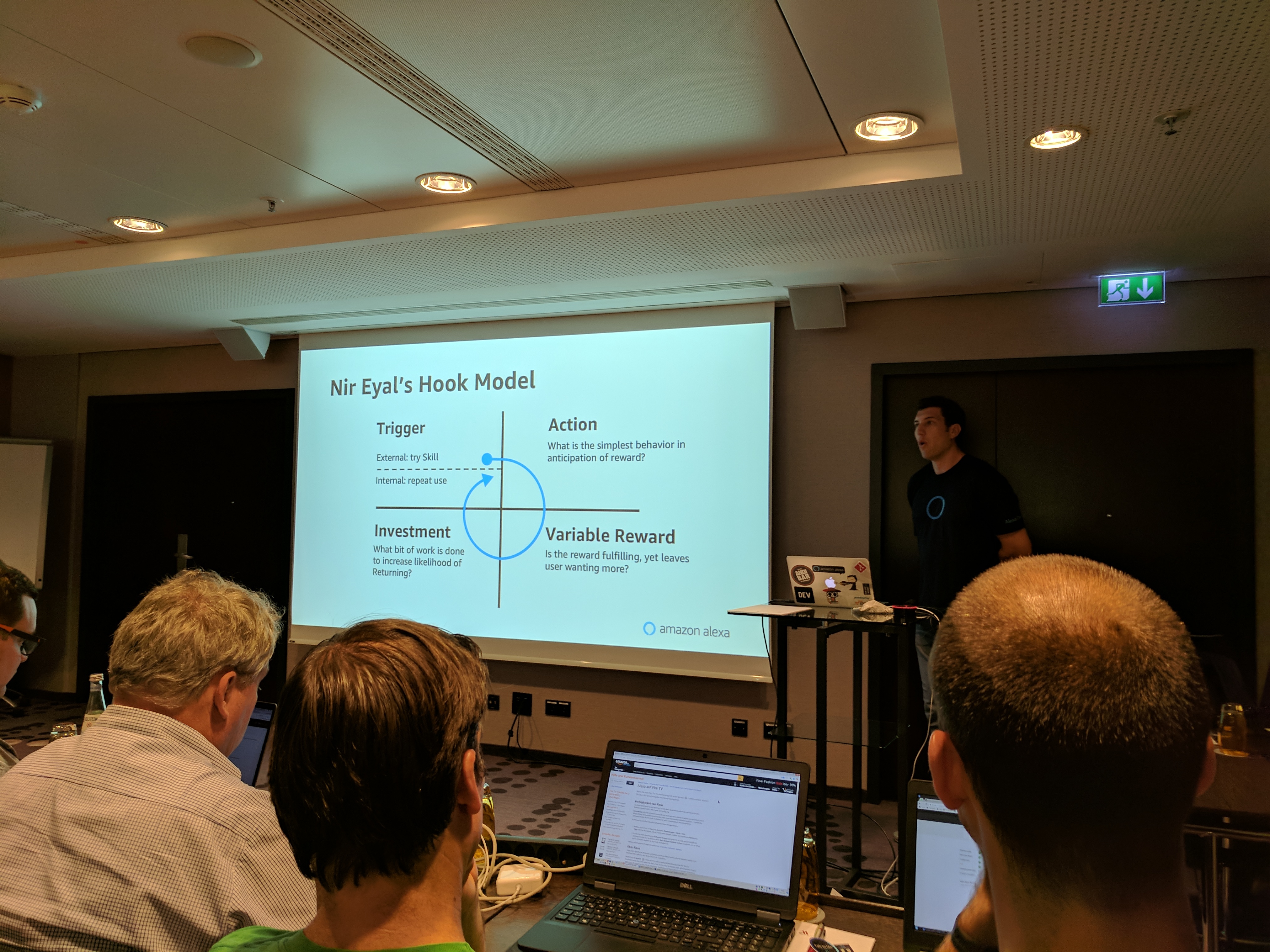

Attract customers through behavior - Nir Eyal's Hook Model

Nir Eyal is a Silicon Valley-based product designer 👨‍🎨 who came up with the Hook Model for habit-forming products in his bestselling book Hooked: How to Build Habit-Forming Products.

I just want to go quickly through the 4 aspects shown in the picture above and describe how my skill addresses them (or not).

- Trigger: The actual trigger that leads the user to use the skill. An external trigger could e.g. be that the user has seen the skill icon and the good reviews in the store and wants to try it out herself. After the first use she keeps using the skill on her own (internal trigger).

- Action: The user should be able to interact with ease and according to human behaviour. In the case of my skill this would be short invocation sentences in a natural, familiar German syntax.

- Variable Reward: The skill should vary its responses to sound more natural. You could e.g. define several answer-templates and choose one randomly. This motivates the user to keep on using the skill to get to hear the other answers. This variation differentiates Nir Eyal's model from traditional feedback loops. I currently do not vary the answers in my skill (besides the actual price infos) but plan to add this in one of the next updates.

- Investment: The more the user invests into the product (money, time, information), the more she values it. For my skill there is not much information or money she needs to invest. The only investment is the time she uses the skill, and maybe the precision of the inputs to get more tailored answers.

In complex skills like role-playing games the user can build an inventory and gets more and more knowledge of the game. She invests time to bring the game to an end and that's an investment.
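
The answer-template idea from the Variable Reward point could be sketched like this. The templates and the `pickAnswer` helper are invented for illustration, since my skill does not vary its answers yet. The random source can be passed in, which makes the function testable.

```javascript
// Several phrasings for the same information.
const templates = [
  'The cheapest {petrolType} in {city} costs {price} Euro at {station}.',
  'Head to {station}: {petrolType} is only {price} Euro there.',
  'In {city} you currently pay the least for {petrolType} at {station}, {price} Euro.'
];

// Pick one template at random and fill in the placeholders.
function pickAnswer(data, random) {
  const rnd = random || Math.random;
  const template = templates[Math.floor(rnd() * templates.length)];
  return template.replace(/\{(\w+)\}/g, function (match, key) {
    // Unknown placeholders stay untouched instead of becoming "undefined".
    return Object.prototype.hasOwnProperty.call(data, key) ? data[key] : match;
  });
}

console.log(pickAnswer({
  petrolType: 'Diesel',
  city: 'Frankfurt',
  price: '1.129',
  station: 'Station A'
}));
```

The facts stay identical in every variant; only the wording changes, so the user still gets a reliable answer.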

This was just a quick excerpt. I highly recommend reading Nir Eyal's book. Your future app developments will profit from it 😉

Useful Links

- Alexa Node Skill SDK on Github as a reference how to implement things

- AWS Console for cloud-functions

- Amazon Developer Console to create and test your actual skills

- Echosim.io - simulates an Echo so you can test your skill with voice when you have no real Echo within reach (seems to work only in Firefox)